Moderating inappropriate content and media was once considered an unsolvable challenge. Bynn has proven otherwise.

Bynn’s advanced AI-driven moderation solution automatically filters and flags harmful or inappropriate content across all media types, keeping your platform safe and your community thriving. With Bynn, you can effortlessly shield users from violent imagery, explicit sexual content, hate speech, scams, and other offensive material in real time – all while maintaining a seamless user experience. In today’s digital landscape, effective moderation isn’t just about checking a box for compliance – it’s fundamental to user trust, community health, and your brand’s reputation.

Striking the right balance between moderation and free expression is crucial, because overly strict moderation, or abrupt tightening in response to regulatory changes, can drive users away from a platform.

One API for All User-Generated Content Types

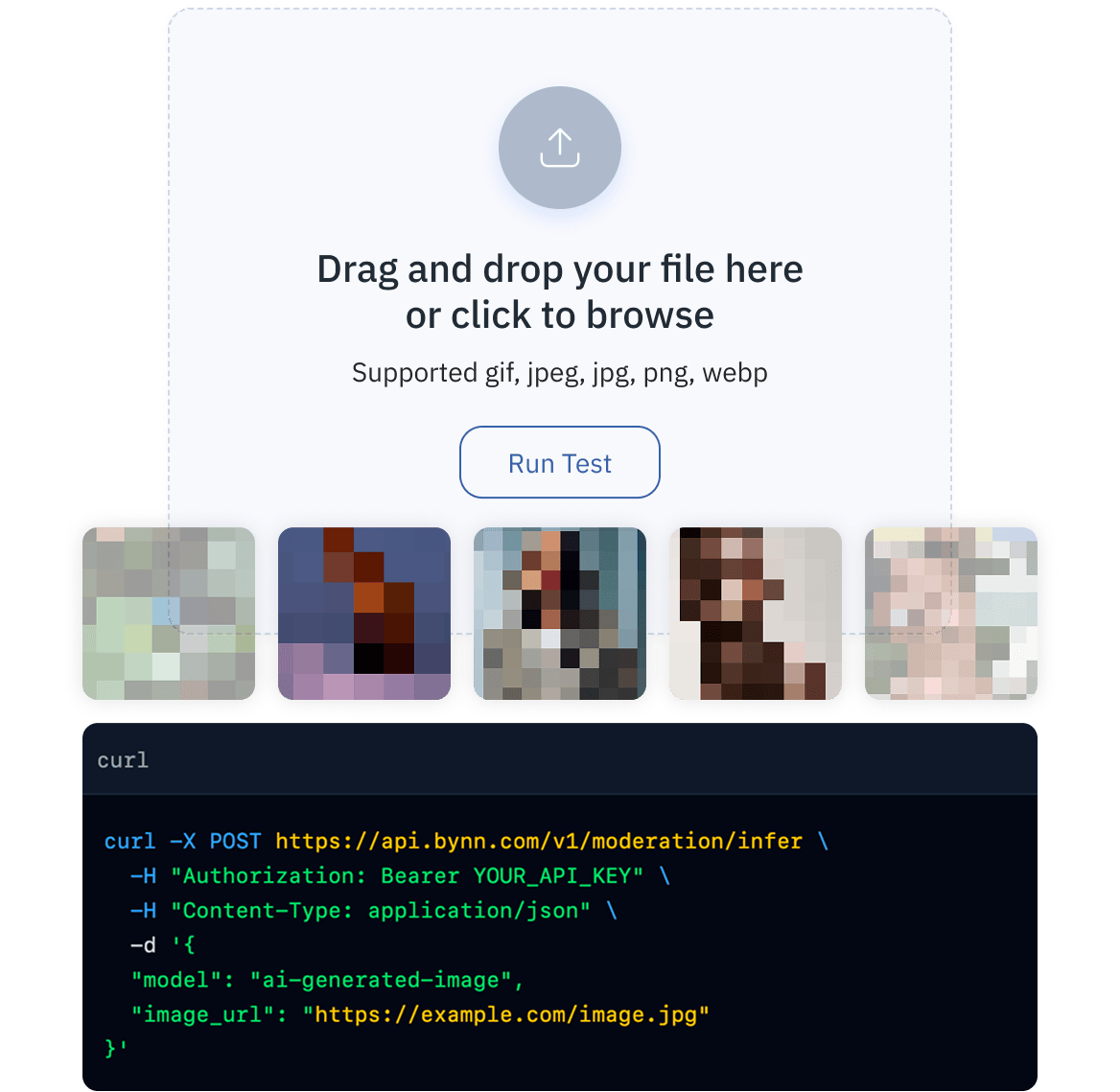

Bynn offers one unified API to moderate every kind of user-generated content. Images, videos, text, audio, and more are processed through a single endpoint, which simplifies integration and speeds up deployment. There is no need to juggle separate tools for different media formats. The API returns structured JSON metadata and plain-language tags in real time, giving you immediate, actionable insights. With a single request, you can analyze an image or video frame for graphic violence, scan a chat message for hate speech, or check an audio clip for profanity.

Our models identify content that violates your guidelines with high accuracy. A single moderation interface means fewer integration points to manage and one consistent format for all your moderation logs and analytics. The result is a streamlined pipeline that catches inappropriate content before it reaches your users, with the same moderation standards applied across every platform and online environment where you use the API.


Next-Generation Vision–Language Artificial Intelligence

At the heart of Bynn’s moderation platform is a cutting-edge vision–language model: a single large AI system that understands visual content and textual context together. Informed by the latest academic research in content moderation and social media analysis, this model can interpret complex scenarios where images are paired with text (for example, a meme with a caption) and provide nuanced, plain-language explanations of why something was flagged. By combining deep visual analysis with natural language understanding, Bynn’s multi-modal AI captures context that single-modality filters miss, whether that means detecting sarcasm in a caption that accompanies a photo or spotting hidden meaning in an image’s details. This context-aware approach significantly reduces moderation mistakes: the AI is far less likely to flag a harmless joke meme as harassment, and it can catch dangerous coded references in image-text combinations that simpler filters might overlook. The result is smarter moderation that adapts to the full context of each post, comment, or upload. Because moderation tools like Bynn’s shape what users see and how they interact with content, getting these decisions right directly affects user experience and engagement.

Trusted by Leading Platforms

Many of the world’s leading social networks, marketplaces, and online communities trust Bynn to safeguard their user-generated content and protect their brand safety. We are an AI-first company with a proven track record in fraud detection and content authenticity, so you get a moderation partner that is battle-tested. Bynn’s infrastructure processes millions of moderation checks every day, several billion pieces of content per month, and scales to billions of API calls per month with enterprise-grade reliability. This volume continuously feeds our AI with fresh data and insights.

From social apps and forums to marketplaces and gaming platforms, Bynn adapts to each industry’s unique needs. Content moderation practices and policies vary widely across social media platforms, shaped by each platform’s community guidelines and the legal frameworks it operates under, and Bynn’s configurable system can accommodate those differences. With our roots in security and compliance, we bring deep expertise in authenticity and trust to content moderation.

Our models recognize more than 100 content categories (and counting), from nudity, hate symbols, and extremist propaganda to spam links and phishing attempts. This rich metadata lets you tailor the system to your platform’s specific rules and definitions of “inappropriate content.” Whatever you’re looking to filter out, be it graphic violence in videos, personal data in text, or covert signals in images, chances are Bynn can detect it. And we do it all with low latency and high accuracy, so moderation never slows down your app or website.


Moderation Across All Media Types

No matter what type of user content you need to moderate, Bynn has you covered. Bynn supports a variety of moderation strategies, including pre-moderation, reactive moderation, and distributed moderation, so your community guidelines are enforced effectively. Many social media platforms also mix in post-moderation, combining several strategies to create a safer online environment.

Content moderation is typically done through a combination of automated tools and human moderators, because human moderators bring an understanding of context, cultural nuance, and subtlety that machines cannot yet fully match. Meeting legal requirements is also essential for compliance and platform integrity. Bynn applies consistent moderation standards across images, videos, text, and audio. Here’s how Bynn tackles each content type:


Visual media is often where the most jarring inappropriate content appears – from violent imagery and graphic gore to sexually explicit photos or hate symbols. Bynn’s image and video moderation tools use state-of-the-art computer vision to scan every picture and video frame for policy violations. We can detect 100+ classes of visual content risks (such as various levels of nudity, sexual activities, violence severity, presence of weapons, drugs and drug use, blood/gore, self-harm indicators, and more). Our models are contextually aware – they distinguish, for instance, between nudity in an art sculpture versus pornography, or medical imagery versus graphic violence. They also recognize hateful insignia, extremist flags, and dangerous memes or logos.

When Bynn flags an image or video, it provides descriptive labels (like “graphic violence” or “adult nudity – severe”) along with confidence scores, so you know exactly why something was flagged. You can set thresholds to automatically block the worst offenses (e.g. pornographic or extremely violent content). Bynn’s video moderation scans footage frame by frame, so even a brief flash of disallowed content in a longer video won’t slip through, and it can pinpoint the exact timestamp of any violation to help your team respond precisely. Flagged content can then be handled with a range of actions, including removal or deletion, reduced visibility, warning labels, account penalties, and legal escalation. With Bynn handling visual moderation, you protect users from disturbing images and videos while letting normal content flow freely.
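The timestamp behaviour described above can be illustrated with a small sketch. It assumes frame-level results arrive as (timestamp, label, confidence) records; the `FrameLabel` type, the `first_violations` function, and the 0.8 threshold are hypothetical and do not reflect Bynn’s actual response schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FrameLabel:
    """One label on one sampled video frame (hypothetical shape, not Bynn's schema)."""
    timestamp_s: float
    label: str
    confidence: float


def first_violations(frames: list[FrameLabel], threshold: float = 0.8) -> dict[str, float]:
    """Map each label that meets the threshold to the earliest timestamp where it occurs."""
    earliest: dict[str, float] = {}
    for frame in frames:
        if frame.confidence < threshold:
            continue  # below threshold: not treated as a violation
        previous = earliest.get(frame.label)
        if previous is None or frame.timestamp_s < previous:
            earliest[frame.label] = frame.timestamp_s
    return earliest


if __name__ == "__main__":
    frames = [
        FrameLabel(3.0, "weapon", 0.55),
        FrameLabel(12.5, "graphic violence", 0.93),
        FrameLabel(12.0, "graphic violence", 0.41),
        FrameLabel(13.0, "graphic violence", 0.97),
    ]
    print(first_violations(frames))  # {'graphic violence': 12.5}
```

Frames are not assumed to arrive in time order, which is why the function compares timestamps instead of simply keeping the first match it sees.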


Inappropriate content isn’t limited to visuals – text posts, comments, and messages can be just as harmful. Bynn’s text moderation engine analyzes user-generated text in real time to intercept hate speech, harassment, explicit sexual language, violent threats, bullying, scams, and other toxic or forbidden content. Our natural language processing (NLP) models are trained on vast datasets and can understand nuance, including slang, abbreviations, and coded language. This means the system can catch subtle insults or veiled profanity that simple keyword filters would miss. It can even recognize when users try to evade filters by disguising banned words with deliberate misspellings or symbols. Bynn’s text moderation also detects solicitation of prohibited activities (like buying drugs or arranging underage drinking), as well as the sharing of personal private information (emails, phone numbers, addresses) that might violate privacy rules. Each piece of text is evaluated and tagged (for example, “harassment/bullying” or “extremist ideology”) so you can take appropriate action – whether that’s automatic removal, flagging for review, or adding a user-visible content warning.
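To see why simple keyword filters miss disguised words, consider the normalization a filter would need before it could match anything. The sketch below is a generic illustration, not Bynn’s method: the `normalize` function and its substitution map are assumptions, and production systems rely on learned models rather than hand-written rules. It folds full-width and accented characters, maps common digit and symbol substitutions to letters, strips separators wedged between letters, and collapses long character runs.

```python
import re
import unicodedata

# Hypothetical substitution map for common "leetspeak" tricks.
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Return a matching-only form of `text` with common obfuscations undone."""
    # NFKD splits accents off letters and maps full-width forms to plain ASCII.
    decomposed = unicodedata.normalize("NFKD", text)
    # Drop the combining accent marks left behind by the decomposition.
    folded = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    # Lowercase, then replace digits and symbols used as letter stand-ins.
    folded = folded.lower().translate(SUBSTITUTIONS)
    # Remove separators placed between word characters, e.g. "s.p.a.m" -> "spam".
    folded = re.sub(r"(?<=\w)[.\-_*](?=\w)", "", folded)
    # Collapse runs of three or more identical characters, e.g. "spaaaam" -> "spam".
    return re.sub(r"(\w)\1{2,}", r"\1", folded)


if __name__ == "__main__":
    for sample in ["Fr33 $p.a.m", "ＳＰＡＭ", "spaaaam", "späm"]:
        print(sample, "->", normalize(sample))
```

Because digits are rewritten as letters and dots are removed, the normalized string is only suitable for matching; checks such as phone-number or email detection need the original text.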

Moderation should not prevent important information from reaching its intended audience, especially on sensitive topics such as harm reduction or drug education. Overly broad rules can inadvertently suppress these valuable discussions, so careful calibration is essential.

Multi-language support lets you moderate a global user base, and our models can be extended to new languages as well as adapted to your community’s unique slang or industry-specific jargon, so nothing slips by due to unfamiliar phrasing. With Bynn, your community discussions stay civil, respectful, and safe from malicious or harmful speech.


Audio and voice content have become integral to modern platforms, from voice notes and podcasts to live-stream audio and in-game chat. Bynn’s moderation capabilities extend seamlessly into the audio realm as well. Our system performs real-time audio moderation by transcribing speech to text and then analyzing that transcript for the same spectrum of issues as text content. If someone speaks hateful slurs in a voice chat or uses graphic sexual language in a podcast, Bynn will flag it just as it would if the content were written. We also analyze audio for other unwanted signals, such as violent sounds or suspicious silence where speech is expected (which could indicate someone trying to circumvent voice detection). The transcription process is fast and accurate, enabling near-instant detection of issues in live audio streams or uploaded files.
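The transcribe-then-analyze flow described above can be sketched as follows. It assumes a speech-to-text step that returns timestamped segments and a text classifier that returns per-label scores; `Segment`, `AudioFlag`, `moderate_audio`, the toy classifier, and the 0.8 threshold are hypothetical stand-ins, not Bynn components. Keeping segment timestamps means a flagged phrase can be traced back to the moment it was spoken.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Segment:
    """A transcribed span of audio (hypothetical output of a speech-to-text step)."""
    start_s: float
    end_s: float
    text: str


@dataclass(frozen=True)
class AudioFlag:
    """A label that crossed the threshold, tied back to its position in the audio."""
    start_s: float
    end_s: float
    label: str
    score: float


def moderate_audio(
    segments: Iterable[Segment],
    classify_text: Callable[[str], dict[str, float]],
    threshold: float = 0.8,
) -> list[AudioFlag]:
    """Run the text classifier on each transcript segment and keep high-scoring labels."""
    flags: list[AudioFlag] = []
    for segment in segments:
        for label, score in classify_text(segment.text).items():
            if score >= threshold:
                flags.append(AudioFlag(segment.start_s, segment.end_s, label, score))
    return flags


def toy_classifier(text: str) -> dict[str, float]:
    """Placeholder for a trained text model; flags one mild word for demonstration."""
    return {"profanity": 0.95} if "darn" in text.lower() else {"profanity": 0.05}


if __name__ == "__main__":
    transcript = [
        Segment(0.0, 2.4, "Welcome back to the show"),
        Segment(2.4, 4.1, "Darn, the stream froze again"),
    ]
    for flag in moderate_audio(transcript, toy_classifier):
        print(flag)
```

Passing the classifier in as a function keeps the audio path reusing exactly the same text rules, which is the point of routing speech through transcription.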

Bynn's audio moderation capabilities are especially valuable for messaging apps, where voice notes and live audio are common. Social media platforms and messaging apps have become major channels for the dissemination of information, including harm reduction discussions, making robust moderation essential to support safe and constructive community interactions.

This automated approach empowers you to enforce standards in live audio rooms (like voice chats in a game or social app) and recorded messages with the same rigor as text or images – a feat that would be nearly impossible with human moderators alone. By covering voice, Bynn ensures inappropriate content doesn’t slip through via sound.


Moderation in Action

Imagine a user posts a video on your platform that unexpectedly contains a burst of graphic violence. Before any human moderator even notices, Bynn’s AI vision model detects the gore in that video frame and automatically flags or removes the content, preventing other users from being exposed. Meanwhile, elsewhere on your platform, a troll might be harassing others with coded insults – but Bynn’s NLP catches the veiled harassment and flags those messages instantly. In both cases, the problematic content is dealt with in real time, and your human team only needs to review the flagged items as needed. This is the power of having Bynn as a 24/7 virtual moderator: harmful posts are caught before they can cause damage, and your community remains safe and enjoyable. No surprise controversies, no PR nightmares – issues get handled proactively before they explode.

Advanced Content Moderation Analysis Tools

Beyond the standard categories of nudity or profanity, Bynn provides a suite of advanced detection features to tackle emerging threats and nuanced scenarios. Our platform goes the extra mile to keep your community clean and secure. Bynn’s advanced detection features are informed by academic research in content moderation and online safety, ensuring our solutions are grounded in the latest scholarly analyses and best practices:

- AI-Generated Content & Deepfake Detection

- Spam and Scam Prevention

- Evasion and Policy Circumvention Detection

- Restricted Content & Minor Protection

- Image Quality Assessment

- Profile Photo & Identity Verification

Harm reduction strategies aim to reduce the harms associated with drug use through education and support, but these strategies face challenges on social media due to content moderation policies. The removal of harm reduction content from social media can have significant consequences for users seeking information on safer drug use practices.

Intuitive Moderation Dashboard

Staying in control of your moderation process is easy with Bynn’s dashboard. Our web-based moderation console gives you a real-time view of all incoming content and flags. Through the dashboard, your team can review flagged posts in context – seeing the image, text, or audio snippet alongside the reason it was flagged – and take action with a single click (approve, remove, or escalate). You can customize filters and sensitivity levels through this interface, enabling or disabling specific content classes as your policy evolves.

The dashboard also provides analytics on moderation activity: track trends like spikes in hate speech or drops in spam after policy changes, and see how content is being moderated over time. All moderation actions are logged for accountability, and you can support user appeals by reviewing and restoring content from the dashboard when needed. With its intuitive layout and search functionality, even non-technical staff can navigate the moderation queue, adjust settings, and maintain a high quality bar for your community. Team members can filter the queue by violation type or user account to zero in on specific issues and prioritize what’s most urgent. Bynn’s dashboard ensures that while AI does the heavy lifting, you remain in full control of your platform’s safety.


Why Choose Bynn for Moderation?

Choosing Bynn means choosing a smarter, safer, and more efficient way to moderate content. Here are some key reasons businesses worldwide partner with us for inappropriate content and media moderation:


Fast & Scalable

Bynn’s moderation API delivers decisions in an instant – typically in just fractions of a second – even under enormous workloads. Our cloud infrastructure scales automatically to handle spikes in content volume, so you can moderate millions of posts, images, or streams per hour without missing a beat. Whether you're a fast-growing startup or an established platform with billions of monthly interactions, Bynn provides reliable performance at any scale. We operate a globally distributed infrastructure to ensure minimal latency worldwide. Plus, as your platform grows, you won’t have to keep expanding a human moderation team – Bynn’s AI handles the increased load efficiently and cost-effectively.

Highly Accurate & Context-Aware

Built on state-of-the-art machine learning models, honed by our in-house experts and continuously improved, Bynn’s content classifiers achieve industry-leading accuracy. We minimize false positives and false negatives by analyzing context, not just keywords or pixels in isolation, which means fewer mistakes and fewer missed issues. Each moderation decision is consistent and auditable: you receive detailed labels and confidence scores for every piece of content. Feedback loops and regular model updates keep the AI learning from new examples, maintaining high precision as trends and slang evolve. Our dedicated R&D team continuously refines the models with real-world data, so you stay a step ahead of new content challenges. In fact, tests show Bynn’s models catch more violations with fewer false alarms compared to other solutions.

Easy Integration & Customization

We know time is money for developers. That’s why Bynn’s solution is designed for quick integration. With comprehensive documentation, intuitive SDKs (for web and mobile), and a developer-friendly REST API, you'll be up and running with just a few lines of code. You can customize which moderation categories to activate and adjust thresholds to fit your community standards. Bynn seamlessly fits into your workflow – whether you need to automatically remove content, flag it for human review, or blur it with a warning, our flexible API has you covered. And if you ever need guidance, Bynn’s support team is ready to help you make the most of our solution.
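Per-category activation and thresholds can be pictured as a small policy table that maps confidence scores to the actions named above: remove, flag for human review, or blur with a warning. The category names, cut-off values, and the `decide` function below are hypothetical examples for illustration, not Bynn’s configuration format; categories missing from the table are treated as disabled.

```python
from enum import IntEnum


class Action(IntEnum):
    """Possible outcomes, ordered from least to most severe."""
    ALLOW = 0
    WARN = 1    # blur behind a content warning
    REVIEW = 2  # route to the human review queue
    REMOVE = 3


# Hypothetical policy: per category, (minimum confidence, action) pairs,
# ordered by descending cut-off.
POLICY: dict[str, list[tuple[float, Action]]] = {
    "adult nudity": [(0.90, Action.REMOVE), (0.60, Action.REVIEW)],
    "graphic violence": [(0.85, Action.REMOVE), (0.50, Action.WARN)],
    "hate speech": [(0.80, Action.REMOVE), (0.40, Action.REVIEW)],
}


def decide(
    scores: dict[str, float],
    policy: dict[str, list[tuple[float, Action]]] = POLICY,
) -> Action:
    """Return the most severe action triggered by any enabled category."""
    result = Action.ALLOW
    for label, score in scores.items():
        for min_confidence, action in policy.get(label, []):
            if score >= min_confidence:
                result = max(result, action)
                break  # cut-offs are descending, so the first match is the highest reached
    return result


if __name__ == "__main__":
    print(decide({"graphic violence": 0.6, "hate speech": 0.45}).name)  # REVIEW
```

Taking the most severe action across categories means one strongly flagged label is enough to escalate a post, while disabling a category is as simple as leaving it out of the table.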

Privacy-First & Compliance-Friendly

Keeping user data secure is a core principle at Bynn. All content analysis is performed by our algorithms; no human moderators ever view your users’ private images, videos, or messages. We don’t store your content after processing, and we never share it with third parties. Our platform is fully GDPR-compliant and built with privacy by design, so you can deploy automated moderation with confidence. Our systems also meet industry-leading security standards (like ISO 27001 and SOC 2) to protect data at every step. By quickly filtering out illegal or harmful material (like extremist content or child exploitation) consistently, Bynn also helps you meet legal obligations and avoid the risks of unchecked user content.


For forward-thinking companies, the time to implement robust content moderation is now – don't wait for a crisis or scandal to force action.

All it takes is one shocking post slipping through to tarnish your brand’s reputation overnight. Beyond ethical concerns, failing to moderate can carry hefty financial risks – from legal fines to advertiser boycotts – which far outweigh the investment in proper moderation. Proactive moderation is the best defense. A clean, well-moderated community also keeps users engaged and gives advertisers confidence that your platform is brand-safe. Bynn’s AI moderates content in milliseconds – far faster than any human team – and it never needs a break, operating 24/7 to safeguard your platform. It can even scale on-demand to handle viral surges of content, so moderation quality never falters during peak times. By letting our powerful multi-modal models take on the heavy lifting, your human moderators can focus on the toughest edge cases and strategic policy decisions, instead of slogging through endless routine posts.


Build the Perfect Moderation Pipeline

Implementing Bynn’s inappropriate content and media moderation solution helps you create a safer, more engaging platform from day one. Our tools empower you to:

Make sure photos and videos are appropriate, safe and authentic

Automatically filter out graphic violence, pornography, deepfakes, and other harmful media before anyone ever sees them.

Block spammers, scammers, ads and other malicious activity

Prevent bots and bad actors from abusing your platform by detecting duplicate content, scam links, unsolicited advertisements, and fraud attempts.

Enforce your terms of use and community guidelines

Catch rule violations in real time (from hate symbols to banned content categories) and keep your community civil and welcoming for everyone. This proactive approach also protects your brand’s reputation and helps ensure regulatory compliance.

Empower your business with Bynn’s inappropriate content and media moderation and join the many innovative platforms already relying on AI to protect their communities. With Bynn as your partner, you can safeguard your users and your brand at scale – and do it all with confidence.
